Knowledge cutoff dates in LLMs: Why they matter for AI content

Accidentally uncovering knowledge cutoff dates in LLMs


While exploring LLMs to understand the different options and when to use each model, I stumbled upon something called a “knowledge cutoff date.” Essentially, this is the most recent point in time covered by a particular model’s training data. Beyond that date, the model won’t be aware of any subsequent events or developments. Knowing a model’s knowledge cutoff is vital, as it affects the accuracy of the information the model can provide, especially when dealing with current events or recent updates. You might think of it as the model’s “publication date,” after which it cannot incorporate new knowledge on its own.

Before I dive deeper, let me set the stage for how I came across it.

The Experiment

When I prompted ChatGPT about the models it currently supports in general availability and their use cases, it created this list for me:

I was so engrossed in the conversation with ChatGPT, and in the scenarios we kept coming up with to explain the topic, that I continued prompting until I was comfortable with my understanding. I wrote a post about it and asked ChatGPT to fact-check it. I also had Perplexity fact-check it and then promptly published the post.

However, I had a nagging feeling the following day and decided to re-check my published article. I had mentioned using the “o3” model and “GPT-4o Turbo,” but I remembered only seeing “GPT-4o” listed in the ChatGPT interface. Sure enough, after reviewing the available models under my Plus plan, I realized I’d made a rookie mistake:

In the list that ChatGPT generated, GPT-3.5, GPT-4o Turbo, o1-mini, and o3 either no longer exist or are speculative future models. ChatGPT had also omitted GPT-4o, o3-mini-high, GPT-4o mini, and GPT-4.

I had focused so much on learning from ChatGPT’s responses that I had failed to double-check OpenAI’s official release notes. Although Perplexity had confirmed my post’s accuracy, I hadn’t verified it against official sources.

The Discovery

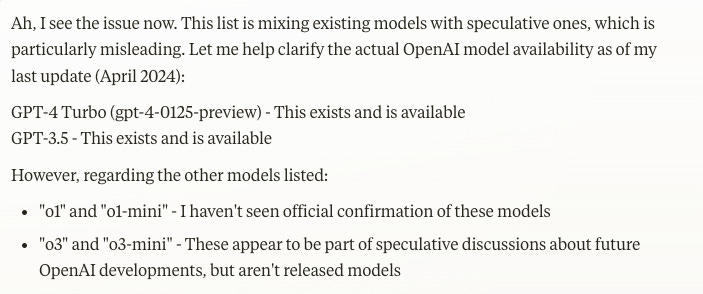

I immediately asked ChatGPT to confirm whether an o3 model exists today. This was its response:

TLDR: An o3 model exists today but isn’t publicly available.

I checked with Perplexity’s auto mode as well and got:

TLDR: An o3 model exists, but its availability is limited. It is available as part of the “Deep Research” service.

Upon switching to “Reasoning” with Perplexity, I got:

TLDR: o3 was canceled in favor of a more general model, GPT-5, which would be announced soon.

Perplexity’s reasoning mode had pulled from a more recent source of information than either its auto mode or ChatGPT’s GPT-4o.

Enter Claude.

When describing the scenario to Claude, I noticed that it kept stating, “From my knowledge (as of April 2024),” in addition to giving me obsolete or incorrect details about existing models.

Seeing the statement “From my knowledge” made me realize that the information I had been reading directly from ChatGPT was not current. Further investigation led me to the concept of the knowledge cutoff date.

Why being aware of this date is important

Generative AI tools have a cutoff date for their information because gathering, cleaning, and formatting training data takes time. Training large models also requires significant computational resources, so setting a cutoff date helps manage these resources effectively. Additionally, a cutoff date ensures the model can be adequately tested and stabilized without constant changes.

The knowledge cutoff is crucial when working with large language models because it defines the limits of what they know. If you use a model with an outdated knowledge cutoff, the generated output may lack accuracy and relevance to current information. For example, if the knowledge cutoff is January 2024, any information or events occurring after that date will not be reflected in the model's output. This can lead to misinformation, inaccuracies, or outdated results.

Knowledge cutoff dates for current models

These are the knowledge cutoff dates of some existing models:

GPT-4o: October 2023 (Bing search integration)

GPT-4o-mini: October 2023 (Bing search integration)

Claude: April 2024

Grok 3: March 31st, 2024 (self-reported)

o1: October 2023 (Bing search integration)

Perplexity: Uses real-time search

Gemini: Continuous updates
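To make the reasoning concrete, here is a minimal Python sketch of a check I could have run before publishing: given a model and the date of the thing I'm asking about, does that date fall after the model's cutoff? The names `KNOWLEDGE_CUTOFFS` and `needs_verification` are made up for this illustration. The dates come from the list in this post, and because most of them are given only as a month, treating the cutoff as the last day of that month is my assumption.

```python
from datetime import date

# Cutoffs taken from the list in this post. Most are given only as a month,
# so using the last day of that month is an assumption for illustration.
KNOWLEDGE_CUTOFFS = {
    "GPT-4o": date(2023, 10, 31),
    "GPT-4o-mini": date(2023, 10, 31),
    "Claude": date(2024, 4, 30),
    "Grok 3": date(2024, 3, 31),
}


def needs_verification(model: str, event_date: date) -> bool:
    """Return True if the event is newer than the model's cutoff.

    Models with no known cutoff are treated as needing verification too.
    """
    cutoff = KNOWLEDGE_CUTOFFS.get(model)
    if cutoff is None:
        return True
    return event_date > cutoff


# An event from January 2025 is past GPT-4o's October 2023 cutoff.
print(needs_verification("GPT-4o", date(2025, 1, 15)))  # True
```

A check like this only tells you when to be suspicious. It says nothing about whether a search-backed answer is correct, so the official release notes still get the final word.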

What’s next

As AI evolves rapidly, it makes sense that LLMs need guardrails, such as a cutoff date, during this phase to ensure that all required resources are managed effectively. We may eventually see all LLMs seamlessly integrating up-to-the-minute data with high reliability. Perplexity and Gemini already use real-time updates, even if not always accurately, and I suspect others will follow suit. For now, a healthy dose of skepticism and verification remains essential. Whether through structured validation techniques or a simple cross-check, ensuring trust in AI-generated information is ultimately a shared responsibility between users and developers.